Conversational Speech Synthesis (CSS) aims to generate speech for a conversation with proper intonation and tone and with appropriate emotion. However, because emotional conversational speech datasets are scarce and emotion is difficult to model, previous research has not studied emotion understanding or emotion rendering in depth. The research team led by Research Fellow Liu Rui of the College of Computer Science (College of Software), Inner Mongolia University (IMU), in cooperation with a research team from TikTok (Singapore), has proposed a new emotional conversational speech synthesis model, ECSS, which significantly improves the naturalness and emotional expressiveness of synthesized conversational speech.

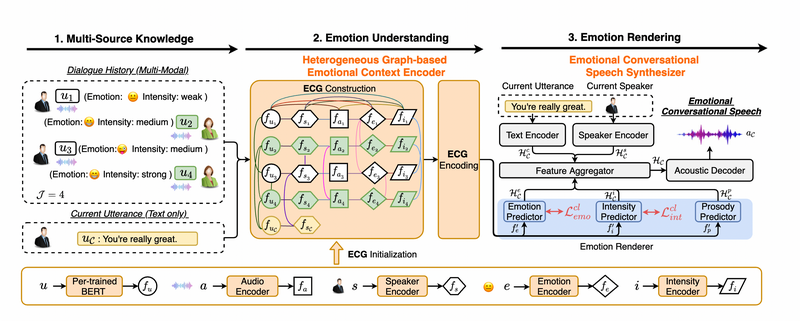

Figure 1. Model architecture of ECSS

First, for emotion understanding, ECSS introduces a heterogeneous graph-based emotional context modeling mechanism. It takes multi-source knowledge from the conversation history, including utterance texts, speech, speaker identities, emotion categories, and emotion intensities, as input and models the conversational context in order to accurately capture the emotional cues in it. Second, for emotion rendering, a contrastive learning-based emotion rendering module is proposed to precisely infer the emotion style of the target utterance and thereby render the conversational emotion accurately. Experimental results show that the proposed model significantly outperforms the baseline models in both the naturalness and the emotional expressiveness of conversational speech, offering a new path for the development of conversational artificial intelligence.
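To make the idea of contrastive learning for emotion rendering a little more concrete, here is a generic supervised contrastive loss written in plain Python. It is a minimal sketch under stated assumptions, not the ECSS module itself: the function name `supervised_contrastive_loss`, the use of emotion-category labels to define positive pairs, cosine similarity, and the temperature of 0.1 are all illustrative choices. Embeddings of utterances that share an emotion label are pulled together, while every other sample in the batch acts as a negative.

```python
from __future__ import annotations

import math
from typing import Sequence


def _normalize(v: Sequence[float]) -> list[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("zero-length embedding")
    return [x / norm for x in v]


def supervised_contrastive_loss(
    embeddings: Sequence[Sequence[float]],
    labels: Sequence[str],
    temperature: float = 0.1,
) -> float:
    """Hypothetical supervised contrastive loss over emotion-style embeddings.

    Samples with the same emotion label are positives for each other; all other
    samples in the batch are negatives. Illustrative only, not the ECSS code.
    """
    if len(embeddings) != len(labels):
        raise ValueError("embeddings and labels must have the same length")
    z = [_normalize(e) for e in embeddings]
    n = len(z)
    # Pairwise cosine similarity divided by the temperature.
    sim = [
        [sum(a * b for a, b in zip(z[i], z[j])) / temperature for j in range(n)]
        for i in range(n)
    ]

    total, anchors = 0.0, 0
    for i in range(n):
        others = [j for j in range(n) if j != i]
        positives = [j for j in others if labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no same-emotion partner contributes nothing
        # Numerically stable log-sum-exp over all non-anchor samples (the denominator).
        m = max(sim[i][j] for j in others)
        log_denom = m + math.log(sum(math.exp(sim[i][j] - m) for j in others))
        # Mean negative log-probability assigned to each positive.
        total += -sum(sim[i][p] - log_denom for p in positives) / len(positives)
        anchors += 1
    if anchors == 0:
        raise ValueError("no anchor has a positive pair in this batch")
    return total / anchors


if __name__ == "__main__":
    emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    print(supervised_contrastive_loss(emb, ["happy", "happy", "sad", "sad"]))
    print(supervised_contrastive_loss(emb, ["happy", "sad", "happy", "sad"]))
```

When the embeddings are already clustered by emotion, the first call returns a small loss; giving the same vectors mismatched labels makes the loss much larger. Minimizing such a loss is one way to push style embeddings to separate by emotion category.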

The paper, titled “Emotion Rendering for Conversational Speech Synthesis with Heterogeneous Graph-Based Context Modeling”, has been accepted by the 38th Annual AAAI Conference on Artificial Intelligence (AAAI 2024), a Class A international conference recommended by the China Computer Federation (CCF). Hosted by the Association for the Advancement of Artificial Intelligence, AAAI is one of the top international conferences in artificial intelligence and brings together leading experts in the field. It has long been regarded as a bellwether of AI research and enjoys a strong reputation in academia.

The authors include Research Fellow Liu Rui (co-first author); Hu Yifan (co-first author), a PhD student who enrolled at IMU in 2023; and Ren Yi, a young scientist at TikTok (Singapore). The research was supported by the Young Scientists Fund of the National Natural Science Foundation of China, the “Grassland Talent” Program of the Inner Mongolia Autonomous Region (IMAR), the IMAR Program for Supporting the Innovation and Entrepreneurship of Returned Overseas Chinese Students, an Open Project of the Guangdong Province Digital Twin Key Laboratory, the IMU Steed Program for Newly Recruited High-Level Talents, and the IMAR Program for Supporting the Scientific Research of Newly Recruited High-Level Talents.